About the Author: Rudolph Marcus Sinclair

Dr. Rudolph Marcus Sinclair is a distinguished expert in counselor education and training, with over 25 years of experience in academic and clinical settings. He holds a Ph.D. in Counseling Psychology from Michael University and has published extensively on counselor training, multicultural competence, and evidence-based practices.

A respected faculty member, Dr. Sinclair teaches graduate courses in counseling theories, techniques, and supervision at a leading university. His work emphasizes cultural competence and social justice in counseling, and he is a sought-after speaker at national and international conferences.

In addition to his academic role, Dr. Sinclair is an active practitioner providing counseling services to diverse populations. Committed to mentoring future counselors, he has received several awards for his contributions to the field, solidifying his status as a prominent figure in the counseling community.

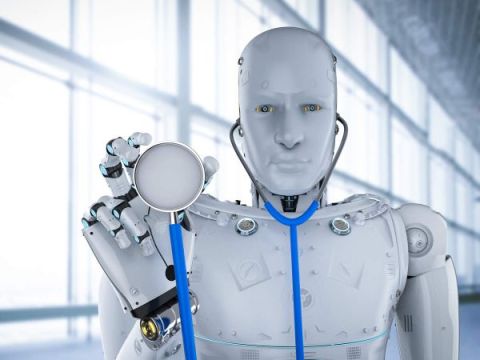

The Rise of Robot Therapists: How AI is Revolutionizing Counseling

Artificial intelligence (AI) now reaches into many areas of daily life, including mental health care. This paper explores the rise of robot therapists, AI-driven applications designed to provide counseling and psychological support, and how they are revolutionizing the field of counseling. Drawing on recent advances in AI technology and their application in therapy, it discusses the potential benefits and challenges of AI in mental health care. It concludes with recommendations for integrating AI into traditional therapeutic practice to enhance accessibility, effectiveness, and overall patient care.

Introduction

The integration of technology into healthcare has brought about significant changes, particularly in the field of mental health. The emergence of AI-driven tools, such as robot therapists, has opened new possibilities for delivering counseling services. These AI applications, often powered by sophisticated algorithms and machine learning techniques, are designed to simulate human-like interactions, providing users with psychological support, coping strategies, and even cognitive behavioral therapy (CBT) sessions. The rise of robot therapists signifies a transformative shift in how mental health services are delivered, making therapy more accessible and personalized.

However, as with any technological advancement, the rise of AI in counseling raises critical questions about the efficacy, ethics, and potential limitations of robot therapists. This paper aims to provide a comprehensive overview of AI’s role in revolutionizing counseling, examining both the promises and the pitfalls of this emerging trend.

The Evolution of AI in Mental Health Care

- Early Beginnings and Technological Advancements

The concept of using machines to simulate therapeutic interactions dates back to the 1960s, when Joseph Weizenbaum at MIT developed ELIZA, one of the first computer programs designed to mimic a therapist’s responses. ELIZA relied on simple keyword matching and scripted replies, yet it laid the groundwork for more advanced AI systems in mental health. Over the past few decades, advances in AI technology, particularly in natural language processing (NLP) and machine learning, have led to more sophisticated robot therapists that can recognize emotional cues in what users write and respond to them.
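To illustrate how simple the early ELIZA-style approach was, the following is a minimal Python sketch of keyword matching with scripted reply templates. The rules, the default reply, and the function name `respond` are illustrative assumptions and are not taken from ELIZA's original script.

```python
import re

# Hypothetical rules for illustration only: each pairs a pattern with a
# reply template. These are NOT the rules from ELIZA's original script.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]
DEFAULT_REPLY = "Please go on."


def respond(message: str) -> str:
    """Return a scripted reply based on the first rule that matches."""
    # Drop surrounding whitespace and trailing punctuation before matching.
    text = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Echo the captured words back inside the reply template.
            return template.format(match.group(1))
    # No keyword matched, so fall back to a neutral prompt.
    return DEFAULT_REPLY
```

Under these assumed rules, `respond("I feel anxious about work.")` returns "Why do you feel anxious about work?". The program has no model of meaning; it only rearranges the user's own words, which is what separates it from the learning-based systems discussed in this section.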

Large language models such as GPT-3 and its successors have further extended the capabilities of robot therapists by enabling more nuanced and human-like conversations. These models can analyze text input, infer its emotional content, and generate responses in a therapeutic style, which makes them potentially valuable tools for mental health support.

- The Rise of AI-Driven Therapy Applications

AI-driven therapy applications, such as Woebot, Wysa, and Tess, are at the forefront of this revolution. These platforms utilize AI to provide users with 24/7 mental health support, offering personalized advice, coping mechanisms, and CBT techniques. For instance, Woebot, developed by researchers at Stanford University, is a chatbot that uses AI to engage users in conversations aimed at alleviating symptoms of anxiety and depression. The application adapts to individual user needs by learning from past interactions, allowing it to offer more personalized and effective support over time.

Similarly, Wysa combines AI with human support to create a hybrid model of therapy. Users can interact with the AI chatbot for immediate help, and if needed, they can be connected with human therapists for more complex issues. This hybrid approach represents a significant advancement in AI-driven therapy, as it blends the efficiency and accessibility of AI with the expertise of human counselors.
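The hand-off between chatbot and human therapist in a hybrid model can be sketched as a simple triage rule. The Python example below is an illustrative assumption and does not describe how Wysa or any other product is actually implemented. The term list `ESCALATION_TERMS` and the function `route_message` are hypothetical, and a real system would need clinically validated risk detection rather than keyword matching.

```python
# Hypothetical phrases that should trigger a hand-off to a human therapist.
# A deployed system would rely on clinically validated risk assessment.
ESCALATION_TERMS = ("suicide", "self-harm", "hurt myself", "overdose")


def route_message(message: str, user_requested_human: bool = False) -> str:
    """Decide whether a message goes to the chatbot or to a human therapist."""
    lowered = message.lower()
    # Escalate when the user asks for a person or the message contains
    # one of the assumed high-risk phrases.
    if user_requested_human or any(term in lowered for term in ESCALATION_TERMS):
        return "human"
    return "chatbot"
```

The design choice in this sketch is to err toward escalation: either an explicit request or a single matching phrase is enough to route the conversation to a person.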

Benefits of AI in Counseling

- Accessibility and Convenience

One of the most significant benefits of AI-driven therapy is its accessibility. Traditional therapy can be expensive, time-consuming, and geographically limited, making it difficult for many individuals to access mental health care. AI therapists, however, are available 24/7 and can be accessed from anywhere with an internet connection. This convenience makes mental health support more accessible to individuals who may not have the time or resources to seek traditional therapy.

Moreover, AI therapy applications can serve as an entry point for individuals who may be hesitant to seek help due to the stigma associated with mental health issues. The anonymity and privacy provided by AI therapists can encourage more people to reach out for support.

- Cost-Effectiveness

AI-driven therapy can also be more cost-effective than traditional therapy. Once an AI application has been built, serving each additional user generally costs far less than providing an in-person session, making it possible to offer these services at a lower cost or even for free. This cost-effectiveness allows mental health organizations to reach a larger audience, particularly in underserved communities.

Additionally, the scalability of AI therapy means that it can be offered to a large number of users simultaneously, further reducing costs and increasing the potential for widespread impact.

- Personalization and Data-Driven Insights

AI therapy applications are designed to learn from user interactions, allowing them to provide personalized support tailored to individual needs. By analyzing user input, AI can identify patterns, track progress, and adapt its responses to better suit the user’s emotional state and therapeutic goals. This level of personalization is difficult to achieve in traditional therapy settings, where therapists must rely on subjective assessments and limited session time.
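As a concrete illustration of progress tracking, the following minimal Python sketch compares a user's most recent self-reported mood scores with the scores just before them. The scoring scale, the window size, and the function name `mood_trend` are assumptions made for illustration and do not reflect how any particular application works.

```python
from statistics import mean
from typing import Sequence


def mood_trend(scores: Sequence[float], window: int = 3) -> str:
    """Compare the mean of the latest `window` scores with the window before it.

    `scores` is assumed to be ordered oldest to newest, with higher values
    meaning better mood (for example, a daily 1-5 self-rating).
    """
    if len(scores) < 2 * window:
        return "insufficient data"
    recent = mean(scores[-window:])
    previous = mean(scores[-2 * window:-window])
    if recent > previous:
        return "improving"
    if recent < previous:
        return "declining"
    return "stable"
```

With the default window of three, `mood_trend([2, 3, 3, 4, 5, 5])` returns "improving". An application could use a signal like this to adjust the tone or content of its responses, although any real deployment would need clinically validated measures.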

Furthermore, AI-driven therapy applications can collect and analyze large amounts of data, providing valuable insights into mental health trends and treatment outcomes. This data can be used to improve the effectiveness of therapy, identify at-risk populations, and inform the development of new therapeutic approaches.

Challenges and Ethical Considerations

- Efficacy and Human Connection

While AI-driven therapy offers many benefits, there are concerns about its efficacy compared to traditional therapy. Human therapists bring empathy, intuition, and a deep understanding of human behavior to their practice—qualities that AI, despite its advancements, cannot fully replicate. The therapeutic relationship, which is central to the effectiveness of counseling, may be compromised in AI-driven therapy, as users interact with machines rather than humans.

Additionally, there are questions about the long-term effectiveness of AI therapy. While AI can provide immediate support, it may not be able to address deep-seated psychological issues that require more intensive, human-led interventions.

- Privacy and Data Security

The use of AI in mental health care raises significant concerns about privacy and data security. AI therapy applications collect sensitive information about users’ mental health, emotions, and personal experiences. If not properly secured, this data could be vulnerable to breaches, leading to serious consequences for users. Ensuring that AI therapy platforms comply with data protection regulations and implement robust security measures is essential to maintaining user trust and protecting their privacy.

- Ethical Implications of AI Decision-Making

AI therapy applications rely on algorithms to analyze user input and generate responses. However, these algorithms are only as good as the data they are trained on, and they may reflect biases present in that data. This can lead to ethical concerns, as biased algorithms may produce inappropriate or harmful responses, particularly for users from marginalized communities. It is crucial for developers to continuously monitor and refine these algorithms to ensure they are providing fair and accurate support.
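One simple form of the monitoring described above is to compare how often responses are judged inappropriate across user groups. The Python sketch below assumes that human reviewers have already labeled a sample of responses. The record format and the function names `flagged_rate_by_group` and `max_rate_gap` are hypothetical, and a real audit would also need adequate sample sizes and statistical testing.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def flagged_rate_by_group(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Return the share of flagged responses per group.

    Each record is assumed to be (group_label, was_flagged), where
    `was_flagged` means a reviewer judged the response inappropriate.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, was_flagged in records:
        totals[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def max_rate_gap(rates: Dict[str, float]) -> float:
    """Return the largest difference in flagged rate between any two groups."""
    return max(rates.values()) - min(rates.values())
```

A large gap between groups does not prove bias on its own, but it tells developers and clinicians where to look more closely.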

Moreover, the increasing reliance on AI in mental health care raises questions about the potential dehumanization of therapy. As AI takes on more responsibilities in counseling, there is a risk that the human aspect of therapy will be diminished, leading to a more transactional and less compassionate approach to mental health care.

The Future of AI in Counseling

- Integrating AI with Human-Led Therapy

The future of AI in counseling likely lies in a hybrid model that integrates AI-driven tools with human-led therapy. AI can be used to complement traditional therapy by providing continuous support between sessions, offering preliminary assessments, and assisting with administrative tasks. This integration allows human therapists to focus on more complex cases and build stronger therapeutic relationships with their clients.

Moreover, AI can be used to enhance the effectiveness of therapy by providing therapists with data-driven insights into their clients’ progress, helping them tailor their interventions more precisely.

- Expanding Access to Mental Health Services

AI-driven therapy applications have the potential to significantly expand access to mental health services, particularly in underserved areas where mental health professionals are scarce. By providing remote and on-demand access to counseling, AI can help bridge the gap in mental health care, reaching populations that might otherwise go without support. For instance, rural communities, low-income areas, and countries with limited healthcare infrastructure could greatly benefit from the widespread deployment of AI therapy platforms.

Moreover, AI can help scale mental health services during crises such as the COVID-19 pandemic, when demand for mental health services surged. AI-driven platforms can handle large volumes of users simultaneously, providing critical support when traditional services are overwhelmed.

- Continuous Improvement and Innovation

As AI technology continues to evolve, so too will the capabilities of robot therapists. Advances in natural language processing, machine learning, and emotional AI (affective computing) will enable these systems to become more sophisticated and responsive to users’ needs. Future AI therapists may be able to detect subtle emotional cues, such as tone of voice or facial expressions, allowing for more empathetic and personalized interactions.

Additionally, the integration of AI with other emerging technologies, such as virtual reality (VR) and augmented reality (AR), could create immersive therapeutic experiences that enhance the effectiveness of treatment. For example, VR-based AI therapy could provide users with guided exposure therapy for phobias or PTSD in a controlled, virtual environment, offering a powerful new tool in the therapist’s arsenal.

Recommendations for Ethical and Effective Implementation

To ensure that AI-driven therapy applications are used ethically and effectively, the following recommendations should be considered:

- Regulatory Oversight and Standards

There is a need for robust regulatory oversight to ensure that AI therapy applications meet high standards of safety, efficacy, and ethical practice. Governments and regulatory bodies should establish guidelines for the development and deployment of AI in mental health care, including requirements for data security, algorithm transparency, and ongoing evaluation of therapeutic outcomes.

- Collaboration Between AI Developers and Mental Health Professionals

AI developers should work closely with mental health professionals to create therapy applications that are clinically sound and aligned with established therapeutic practices. This collaboration is essential for ensuring that AI-driven tools complement rather than replace human-led therapy, and that they are designed with the best interests of users in mind.

Mental health professionals should also be involved in the ongoing monitoring and refinement of AI algorithms to prevent biases and ensure that the systems are delivering appropriate and effective support.

- Training and Education for Therapists

As AI becomes more integrated into counseling, it is crucial to provide training and education for mental health professionals on how to effectively use AI tools in their practice. Therapists should be equipped with the knowledge to interpret AI-generated insights, integrate AI-driven support into their therapeutic approach, and address any ethical concerns that arise from the use of AI in therapy.

- Ensuring Accessibility and Inclusivity

AI therapy applications should be designed to be accessible and inclusive, taking into account the diverse needs of users from different cultural, linguistic, and socioeconomic backgrounds. This includes providing support in multiple languages, accommodating different levels of digital literacy, and ensuring that the AI systems are free from biases that could negatively impact marginalized groups.

Conclusion

The rise of robot therapists represents a significant milestone in the evolution of mental health care, offering new opportunities to expand access to counseling and enhance the effectiveness of therapy. AI-driven therapy applications have the potential to revolutionize the field by providing personalized, cost-effective, and convenient mental health support. However, the successful integration of AI into counseling requires careful attention to the challenges of ethics, efficacy, and privacy that this technology raises.

By fostering collaboration between AI developers and mental health professionals, ensuring robust regulatory oversight, and prioritizing inclusivity and accessibility, the field can harness the power of AI to improve mental health outcomes on a global scale. As AI technology continues to advance, the future of counseling will likely involve a synergistic relationship between human therapists and their AI counterparts, working together to provide the best possible care for individuals in need.
